f0rum: Community-driven Article Validation

As internet usage grows, so does the opportunity for misinformation to spread. If we want to solve important problems, we first need to deal with misinformation. f0rum is one possible way of doing so.

- Problem Statement 📝

- Product Description 🏛

- Existing Solutions 🚔🐦

- Possible Use Cases 👨💻

- Structured Transparency Aspect 💥

- Possible Pitfalls 📉❔

- Conclusion 💪

Problem Statement 📝

As internet usage grows, so does the opportunity to share and access new information. This can be beneficial in many ways: you can learn new topics from experts, follow events from all around the world, and find out how to solve problems that you thought were specific to your use case. The internet has become a vast trove of all kinds of human knowledge and experience.

On the other hand, as internet usage grows, so does the opportunity to share false information, potentially with a massive audience. If left unchecked, these flows of misinformation can damage not only individuals but society as a whole. Research on social media suggests that false stories can spread faster than true ones. Misinformation also promotes anti-intellectualism, antiscience movements, and the deliberate propagation of ignorance (the subject of agnotology). In other words, if we want to solve pressing problems like climate change, we need people to trust the science!

Product Description 🏛

Forums in Ancient Rome were used for publicly sharing important information, holding educational events, and even running elections, among other things. They were places where common people could gather, have fun, and also learn reliable information. f0rum got its name by combining the ancient meaning of forums with modern technology (the “o” is replaced by a binary “0”)!

f0rum is an app for detecting untrue and fake articles with the help of a community of experts, and for showing the results of their votes to regular readers. Experts are employed instead of regular people because f0rum is meant for articles that are scientific in nature. Think of articles about COVID-19 vaccines, for example. It’d certainly be much easier if we knew which ones to trust.

The main goal of f0rum is to incentivize the publishing of valid, true scientific stories, and to provide an environment where readers become better educated, better informed, and able to make better decisions in the long run.

This defines a clear value for the readers, but what about the experts? Experts tend to read pieces that interest them, which, more often than not, fall into their area of expertise. When that happens, they can vote on the article. When it doesn’t, they can see other experts’ votes. In a sense, this gives every expert a dual role: they are both experts and regular readers.

Existing Solutions 🚔🐦

Two existing solutions that combat misinformation are the pol.is platform used in Taiwan and Twitter’s Birdwatch project.

🐦 Birdwatch is Twitter’s community-driven approach to misinformation. People can post notes on a tweet and after a consensus is reached, other people can view those notes. f0rum differs from Birdwatch in three main ways:

- f0rum utilizes only experts to achieve more accurate results, while Birdwatch utilizes all people using Twitter

- f0rum keeps experts’ data private when it comes to voting, while Birdwatch freely shows notes and people that posted them

- f0rum works primarily for scientific articles, while Birdwatch’s main focus lies in political articles (because of Twitter’s nature)

🚔 Pol.is is a platform, used prominently in Taiwan, for analyzing and understanding what large groups of people think. It’s mainly used to reach political consensus and create better regulations, but also to detect misinformation. Beyond the platform, Taiwan has built an anti-misinformation environment offline: classes on detecting misinformation are part of public education, and state agencies are required to correct false claims. f0rum differs from pol.is in two main ways: it employs experts exclusively, and it has a voting system for articles, which pol.is doesn’t have. If you’re interested in Taiwan’s amazing tech solutions, here’s an intriguing read:

“1/ In the midst of 195+ strategies for COVID-19, Taiwan has captured headlines for its speedy, effective response (<1K cases to date)

But this barely scratches the surface of Taiwan's collective, democratic innovations. I discuss these in my latest piece: https://t.co/gitWJ26xb9”

Divya Siddarth (@divyasiddarth), January 23, 2021

Possible Use Cases 👨💻

f0rum’s two main use cases are the verification of experts and the verification of articles by voting.

Verifying experts is very hard without testing the knowledge of every person, which would be a very slow, cumbersome, and expensive process. Instead, f0rum requires experts to hold a Ph.D. in their field of expertise in order to vote on articles in that field.

The second use case happens after an expert reads an article in their field: they can now vote on whether the article is valid or not. After a considerable number of votes have been cast, the article is shown to regular readers along with the consensus the experts reached.
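The gating step in the second use case can be sketched as follows. This is only an illustration under stated assumptions: the quorum `MIN_VOTES` and the function name `consensus` are hypothetical (the text only speaks of a “considerable” number of votes), and consensus is reduced here to a simple share of “valid” votes.

```python
from collections import Counter
from typing import Optional

MIN_VOTES = 5  # hypothetical quorum; the article does not specify a number


def consensus(votes: list[bool], min_votes: int = MIN_VOTES) -> Optional[dict]:
    """Return the tally once the quorum is met, otherwise None.

    None means the article is not yet shown to regular readers.
    True stands for "valid", False for "not valid".
    """
    if len(votes) < min_votes:
        return None
    counts = Counter(votes)
    valid, invalid = counts[True], counts[False]
    return {
        "valid": valid,
        "invalid": invalid,
        "share_valid": valid / len(votes),
    }
```

Calling `consensus([True, False])` returns `None` because the quorum is not met, while a list of five or more votes returns the counts that readers would see next to the article.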

But how do these use cases actually work? And where does privacy-enhancing technology come into play? Let’s have a look!

Structured Transparency Aspect 💥

Verification of Experts 👩🔬✅

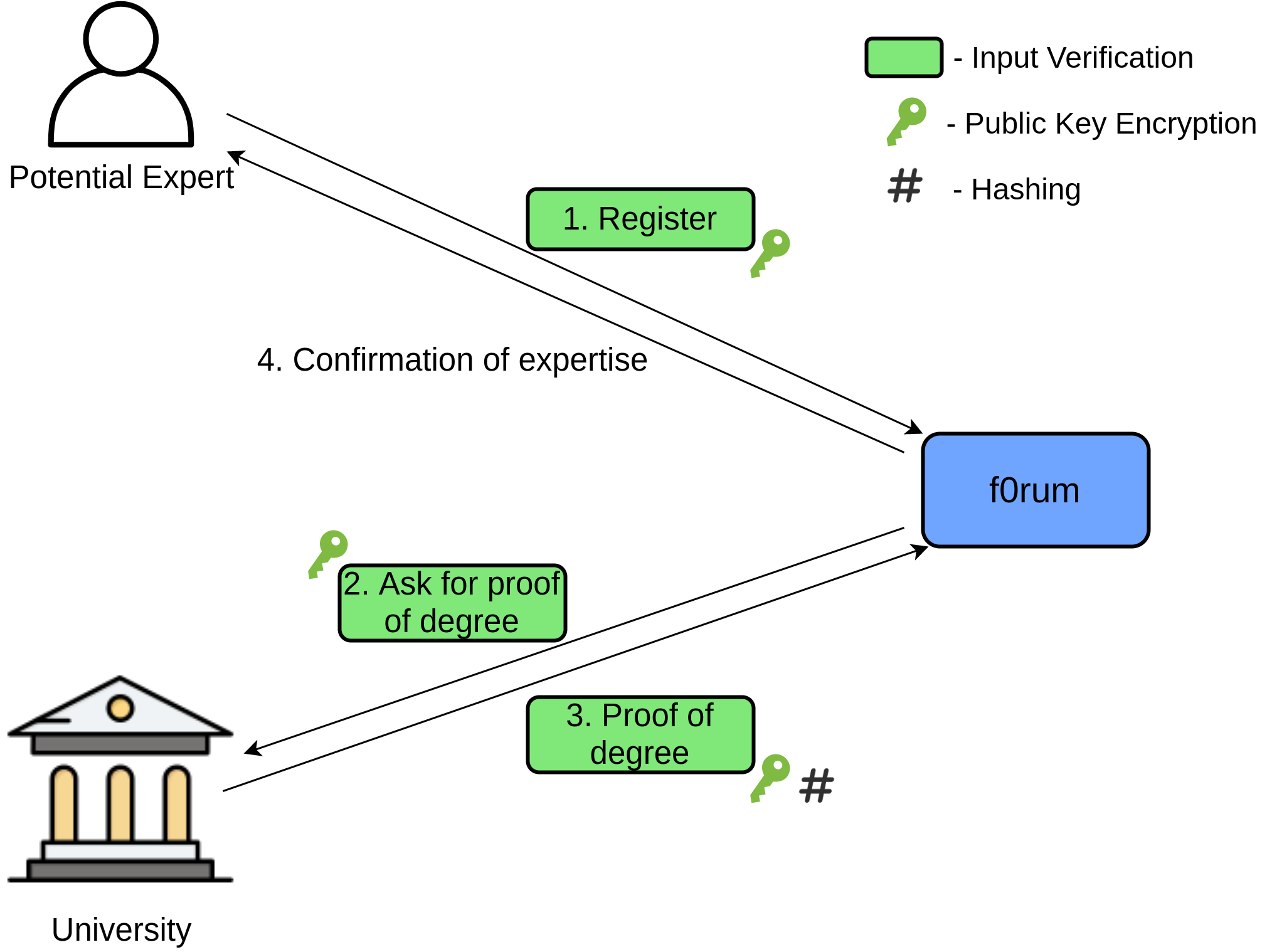

This Information Flow consists of four parts, which occur in the following order:

- The expert registers on f0rum and enters their field of expertise and the university where they got their Ph.D. Input Verification, here a simple use of Public Key Encryption (PKE), verifies that the person registering is exactly who they say they are.

- After that, f0rum asks the university for the potential expert’s proof of degree (usually a transcript with a degree that includes a name and a field of expertise). This Information Flow uses PKE too, so the university can verify f0rum as the inquirer.

- The university sends the proof of degree back to f0rum using both Public Key Encryption and Hashing. PKE lets f0rum verify that the right university sent the proof, while the hash lets f0rum detect whether the proof was tampered with.

- Finally, the potential expert becomes a verified one and can vote on available articles.

Since all information goes through f0rum, a unilateral style of governance is employed here.
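The hashing part of the third step could look roughly like the sketch below. It assumes SHA-256 as the hash function and uses hypothetical helper names (`digest`, `is_untampered`); neither is specified by f0rum. The public-key part is left out because it needs a cryptography library. Note that the digest only protects the proof if it is itself signed by the university or delivered over an authenticated channel.

```python
import hashlib
import hmac


def digest(document: bytes) -> str:
    """Hex-encoded SHA-256 digest of the proof of degree (assumed hash function)."""
    return hashlib.sha256(document).hexdigest()


def is_untampered(document: bytes, expected_digest: str) -> bool:
    """Recompute the digest on f0rum's side and compare it in constant time."""
    return hmac.compare_digest(digest(document), expected_digest)
```

In this sketch the university would compute `digest(proof)` before sending, and f0rum would call `is_untampered(received_proof, received_digest)` on arrival. Any change to the proof, even a single byte, makes the check fail.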

Verification of Articles 📰✅

This Information Flow and use case is the main one of f0rum. It’s meant to educate readers on which articles should or shouldn’t be trusted. Because this Information Flow is the most important one, it uses all aspects of Structured Transparency, in the following way:

- Input Privacy: by using Public Key Encryption, Input Privacy can be easily achieved. An expert “signs” their vote with their private key, and then encrypts the vote with f0rum’s public key. Thus, each expert can vote on the validity of an article without worrying about others finding out how they voted. On the other side, f0rum can decrypt the vote with its private key and then verify which expert voted by using the expert’s public key.

- Output Privacy: since the voting result is an aggregate statistic (the sum of all votes on each side), Differential Privacy is just the right tool to reveal the final result without also revealing individual votes.

- Input Verification: as mentioned previously, when an expert uses their private key to “sign” a vote, f0rum can use that to verify that the vote was sent from a trusted source (a verified expert).

- Output Verification: used to verify the outcome of the voting, for example that every expert who voted did so only once, and that only verified experts voted.

- Flow Governance: since all information here flows between experts and f0rum, f0rum would seem to be the actor that should define the Information Flow. But experts are the ones with the data! It’s better practice to let them hold their data (instead of f0rum), so they decide for themselves what happens with it afterward.
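Two of the points above, Output Verification and Output Privacy, can be sketched in a few lines. This is an illustration, not f0rum’s implementation: the function names, the ballot format `(expert_id, vote)`, and the privacy parameter `epsilon` are all assumptions. The noise comes from the Laplace mechanism, a standard way to achieve Differential Privacy for counts, assuming two neighbouring vote sets differ by adding or removing a single ballot (so each count changes by at most 1).

```python
import random


def verify_ballots(ballots: list[tuple[str, bool]], verified: set[str]) -> bool:
    """Output Verification: only verified experts voted, and each voted once."""
    voters = [expert_id for expert_id, _ in ballots]
    no_duplicates = len(voters) == len(set(voters))
    all_verified = set(voters) <= verified
    return no_duplicates and all_verified


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) noise as the difference of two exponential samples."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_tally(
    ballots: list[tuple[str, bool]], epsilon: float, rng: random.Random
) -> tuple[float, float]:
    """Output Privacy: release noisy counts of "valid" and "not valid" votes."""
    valid = sum(1 for _, vote in ballots if vote)
    invalid = len(ballots) - valid
    scale = 1.0 / epsilon  # sensitivity 1 under add/remove-one-ballot
    return valid + laplace_noise(scale, rng), invalid + laplace_noise(scale, rng)
```

A smaller `epsilon` means more noise and stronger privacy. With only a handful of votes the noise can swamp the result, which is one more reason to wait for a considerable number of votes before publishing.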

Possible Pitfalls 📉❔

Of course, f0rum isn’t perfect, far from it. Verification of experts, the scale of f0rum, and treating biased experts are just some of the possible problems. We’ll go through each of them here.

Verification of Experts 👨🔬

As mentioned previously, experts are verified via their university. This isn’t a fast solution, but it works, since only the university can provide proof of degree. Unfortunately, only experts with formal degrees such as a Ph.D. or MD can be verified. Not all people with a Ph.D. are experts, and not all experts have a doctoral degree! For now, this is the only way to actually verify expertise without testing millions of potential experts, which would introduce new problems like cheating.

Scale of f0rum 🌍

Two problems tied to running f0rum on a nationwide scale are a lack of diverse experts in smaller countries and the possibility of media curators acting as “experts” in communist countries. Both of these problems could be solved if f0rum ran on a global scale.

On the other hand, if f0rum actually ran on a global scale, verifying experts in the way described above wouldn’t scale well. We’d have one central body (f0rum) collaborating with possibly every university in the world on one task.

Treating Biased Experts 🤥

Biased experts are expected to show up on f0rum. For example, they might use the app to “validate” articles the way their employers or even governments tell them to. Sadly, this is a huge problem in today’s society. As a result, it can erode the population’s trust in media, government, and even science.

Since f0rum is a community-driven app, the problem of biased experts can be mitigated. The idea is based on the law of large numbers, which states that “as a sample size grows, its mean gets closer to the average of the whole population”. Thus, as long as biased experts remain a minority, the bigger the community of experts, the more their votes are neutralized!
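A small simulation can make this intuition concrete. Everything in it is an assumption made for illustration: biased experts always vote for the wrong outcome, honest experts independently vote correctly with probability `p_correct`, and the result is decided by simple majority. None of these numbers come from f0rum.

```python
import random


def majority_correct_rate(
    n_experts: int,
    biased_share: float,
    p_correct: float,
    trials: int,
    rng: random.Random,
) -> float:
    """Fraction of simulated votes in which the majority reaches the right verdict."""
    n_biased = int(n_experts * biased_share)  # these always vote wrong
    n_honest = n_experts - n_biased
    wins = 0
    for _ in range(trials):
        # Count honest experts who happen to vote correctly in this trial.
        correct_votes = sum(rng.random() < p_correct for _ in range(n_honest))
        if correct_votes > n_experts / 2:
            wins += 1
    return wins / trials


if __name__ == "__main__":
    rng = random.Random(42)
    for size in (11, 51, 501):
        rate = majority_correct_rate(size, 0.2, 0.8, 2000, rng)
        print(f"{size} experts: majority correct in {rate:.1%} of trials")
```

Under these assumptions, larger panels reach the right verdict more reliably. The effect depends on biased experts being a minority: if they outnumber honest experts, a bigger community does not help.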

Conclusion 💪

If we, as a society, want to move forward and solve important problems, we’ll first need people to believe in science. Currently, the free flow of misinformation is the main obstacle to achieving that.

f0rum was thought of as one possible solution: one that uses experts’ free time and knowledge to better educate a potentially huge number of people. And maybe, by doing so, we’ll achieve our goal of trusting science and solve our most pressing problems before it’s too late.